Parallel Computing Explained: How It Powers Modern Technology in 2025

Introduction

Parallel computing is a form of computation in which many calculations are carried out simultaneously. In the simplest sense, it is the simultaneous use of multiple compute resources, such as multiple CPUs, to solve a computational problem. The problem is broken into discrete parts that can be solved concurrently, and each part is further broken down into a series of instructions.

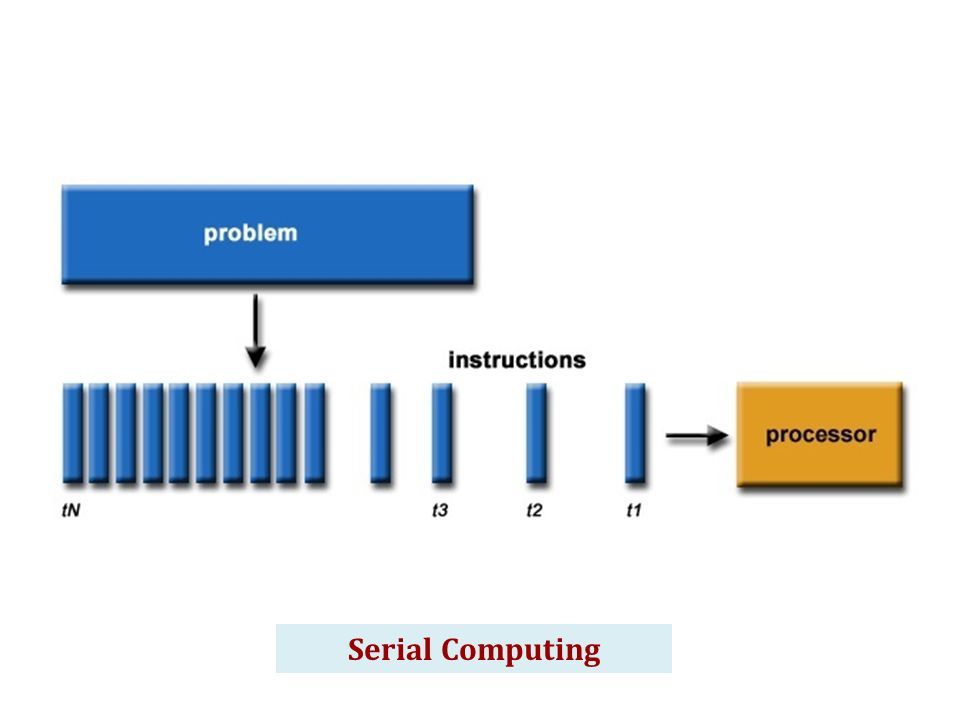

What is Serial Computing?

- Traditionally, software has been written for serial computation to be run on a single computer having a single Central Processing Unit (CPU).

- A problem is broken into a discrete series of instructions.

- Instructions are executed one after another, and only one instruction executes at any moment in time.

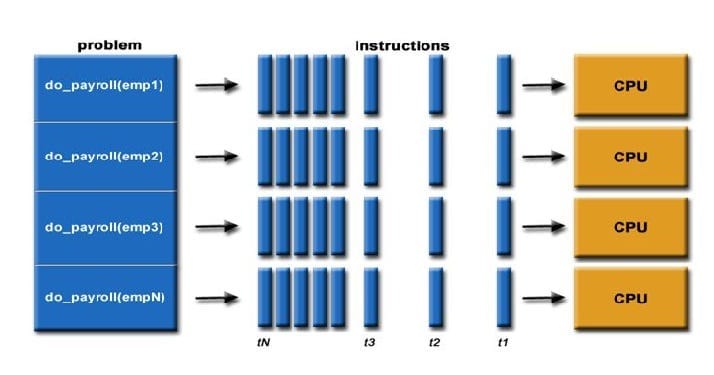

What is Parallel Computing?

- Parallel Computing is a form of computation in which many calculations are carried out simultaneously.

- In the simplest sense, it is the simultaneous use of multiple compute resources, such as multiple CPUs, to solve a computational problem.

- A problem is broken into discrete parts that can be solved concurrently.

- Each part is further broken down to a series of instructions.

- Instructions from each part execute simultaneously on different CPUs.
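The decomposition described above can be sketched in Python. This is a minimal illustration under stated assumptions, not a benchmark: the names `split_into_parts`, `partial_sum`, and `parallel_sum` are hypothetical, and the sketch uses threads from the standard library's `concurrent.futures` for simplicity. In CPython, threads generally do not run CPU-bound code on several cores at once, so passing `ProcessPoolExecutor` as `executor_cls` would be the more typical way to occupy multiple CPUs.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_parts(data, n_parts):
    """Break the problem into roughly equal, discrete parts."""
    size = max(1, -(-len(data) // n_parts))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def partial_sum(part):
    """Each part is itself a series of instructions (here, a loop)."""
    total = 0
    for x in part:
        total += x * x
    return total


def parallel_sum(data, n_parts=4, executor_cls=ThreadPoolExecutor):
    """Hand each part to a worker, then combine the partial results."""
    parts = split_into_parts(data, n_parts)
    with executor_cls(max_workers=n_parts) as pool:
        return sum(pool.map(partial_sum, parts))


if __name__ == "__main__":
    numbers = list(range(1_000))
    print(parallel_sum(numbers))
```

The result matches a plain serial loop over the same data; only the way the work is scheduled differs.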

Why use Parallel Computing?

Main reasons:

- The compute resources can be as simple as a single computer with multiple processors.

- Save time and money.

- Solve larger problems, e.g. web search engines and databases processing millions of transactions per second.

- Use non-local resources, such as machines reachable over a network.

Limitations of Serial Computing

- Limits to serial computing – both physical and practical reasons pose significant constraints to simply building ever faster serial computers.

- Transmission speeds – the speed of a serial computer is directly dependent upon how fast data can move through hardware. Absolute limits are the speed of light (30 cm/nanosecond) and the transmission limit of copper wire (9 cm/nanosecond). Increasing speeds necessitate increasing proximity of processing elements.

- Limits to miniaturization – processor technology is allowing an increasing number of transistors to be placed on a chip. However, even with molecular or atomic-level components, a limit will be reached on how small components can be.

- Economic limitations – it is increasingly expensive to make a single processor faster. Using a larger number of moderately fast commodity processors to achieve the same (or better) performance is less expensive.
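To make the transmission-speed limit concrete, the short calculation below estimates how far a signal can travel in one clock cycle. It uses the two figures quoted above; the clock rates and the helper name `distance_per_cycle_cm` are assumptions chosen for illustration.

```python
SPEED_OF_LIGHT_CM_PER_NS = 30.0   # figure quoted in the text
COPPER_LIMIT_CM_PER_NS = 9.0      # figure quoted in the text


def distance_per_cycle_cm(speed_cm_per_ns, clock_ghz):
    """Distance a signal can cover during one clock cycle.

    A clock of f GHz has a period of 1/f nanoseconds.
    """
    period_ns = 1.0 / clock_ghz
    return speed_cm_per_ns * period_ns


if __name__ == "__main__":
    for clock_ghz in (1.0, 3.0, 5.0):  # illustrative clock rates (assumed)
        light = distance_per_cycle_cm(SPEED_OF_LIGHT_CM_PER_NS, clock_ghz)
        copper = distance_per_cycle_cm(COPPER_LIMIT_CM_PER_NS, clock_ghz)
        print(f"{clock_ghz} GHz: light {light:.1f} cm, copper {copper:.1f} cm")
```

At an assumed 3 GHz, a signal in copper covers at most about 3 cm per cycle, which is why higher clock rates force processing elements closer together.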

Flynn’s Classical Taxonomy

- There are different ways to classify parallel computers. One of the more widely used classifications, in use since 1966, is called Flynn’s Taxonomy.

- Flynn’s taxonomy classifies multi-processor computer architectures along two independent dimensions, Instruction and Data. Each dimension can have only one of two possible states: Single or Multiple.
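Because the two dimensions are independent and each has two states, the taxonomy yields exactly four classes. A small sketch (the function name `flynn_classes` is hypothetical) enumerates them:

```python
from itertools import product

STATES = ("Single", "Multiple")


def flynn_classes():
    """Return every (instruction, data) combination keyed by its acronym."""
    classes = {}
    for instruction, data in product(STATES, repeat=2):
        acronym = f"{instruction[0]}I{data[0]}D"
        classes[acronym] = f"{instruction} Instruction, {data} Data"
    return classes


if __name__ == "__main__":
    for acronym, name in flynn_classes().items():
        print(acronym, "=", name)
```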

Single Instruction, Single Data (SISD)

- A serial (non-parallel) computer.

- Single instruction: only one instruction stream is being acted on by the CPU during any one clock cycle.

- Single data: only one data stream is being used as input during any one clock cycle.

- Deterministic execution.

- This is the oldest and, until recently, the most prevalent form of computer.

- Examples: most PCs, single-CPU workstations and mainframes.

Single Instruction, Multiple Data (SIMD)

- A type of parallel computer.

- Single instruction: all processing units execute the same instruction at any given clock cycle.

- Multiple data: each processing unit can operate on a different data element.

- This type of machine typically has an instruction dispatcher, a very high-bandwidth internal network, and a very large array of very small-capacity instruction units.

- Best suited for specialized problems characterized by a high degree of regularity, such as image processing.

- Synchronous (lockstep) and deterministic execution.

- Two varieties: Processor Arrays and Vector Pipelines.

- Examples of Processor Arrays: Connection Machine CM-2, MasPar MP-1, MP-2.

- Examples of Vector Pipelines: IBM 9000, Cray C90, Fujitsu VP, NEC SX-2, Hitachi S820.
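The SIMD idea, one instruction applied to many data elements in lockstep, can be modelled conceptually in plain Python. This is only a simulation: the list comprehension below runs serially, whereas SIMD hardware applies the instruction across all elements in the same clock cycle. The names `simd_step` and `brighten`, and the brightness amount, are hypothetical and chosen to echo the image-processing example.

```python
def simd_step(instruction, lanes):
    """Apply one instruction to every data element in the same step."""
    return [instruction(value) for value in lanes]


def brighten(pixel, amount=40):
    """Hypothetical image operation: raise brightness, clamp at 255."""
    return min(255, pixel + amount)


if __name__ == "__main__":
    pixels = [0, 100, 200, 250]
    print(simd_step(brighten, pixels))  # prints [40, 140, 240, 255]
```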

Multiple Instruction, Single Data (MISD)

- A single data stream is fed into multiple processing units.

- Each processing unit operates on the data independently via independent instruction streams.

- Few actual examples of this class of parallel computer have ever existed. One is the experimental Carnegie-Mellon C.mmp computer (1971).

- Some conceivable uses might be multiple frequency filters operating on a single signal stream, or multiple cryptography algorithms attempting to crack a single coded message.
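The "multiple filters on a single signal stream" use case can be sketched as several independent operations all reading the same data. This is a conceptual model only, executed serially; `moving_average` and `misd_step` are hypothetical names, and the simple moving average stands in for a real frequency filter.

```python
def moving_average(signal, window):
    """Smooth the signal with a sliding window of the given size."""
    return [
        sum(signal[i:i + window]) / window
        for i in range(len(signal) - window + 1)
    ]


def misd_step(signal, instruction_streams):
    """Feed the same data stream to every instruction stream."""
    return {name: op(signal) for name, op in instruction_streams.items()}


if __name__ == "__main__":
    signal = [1, 5, 2, 8, 3, 9]
    filters = {
        "narrow": lambda s: moving_average(s, 2),
        "wide": lambda s: moving_average(s, 3),
        "peak": max,
    }
    print(misd_step(signal, filters))
```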

Multiple Instruction, Multiple Data (MIMD)

- Currently, the most common type of parallel computer. Most modern computers fall into this category.

- Multiple Instruction: every processor may be executing a different instruction stream.

- Multiple Data: every processor may be working with a different data stream.

- Execution can be synchronous or asynchronous, deterministic or non-deterministic.

- Examples: most current supercomputers, networked parallel computer “grids” and multi-processor SMP computers – including some types of PCs.
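A MIMD-style workload, with different instruction streams working on different data, can be sketched with the standard library's `ThreadPoolExecutor`. The task functions `count_words` and `largest` and the wrapper `mimd_run` are hypothetical; as with any thread-based sketch in CPython, this shows the structure rather than true multi-core execution.

```python
from concurrent.futures import ThreadPoolExecutor


def count_words(text):
    """One instruction stream: count whitespace-separated words."""
    return len(text.split())


def largest(numbers):
    """A different instruction stream: find the maximum value."""
    return max(numbers)


def mimd_run(tasks):
    """Run each (function, data) pair on its own worker.

    Workers may finish in any order, but results are returned
    in the order the tasks were listed.
    """
    with ThreadPoolExecutor(max_workers=max(1, len(tasks))) as pool:
        futures = [pool.submit(func, data) for func, data in tasks]
        return [future.result() for future in futures]


if __name__ == "__main__":
    print(mimd_run([(count_words, "a b c"), (largest, [3, 9, 2])]))
```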

Parallel computing use cases

Today, commercial applications provide a driving force equal to or greater than that of scientific research in the development of faster computers. These applications require the processing of large amounts of data in sophisticated ways. Example applications include:

- parallel databases, data mining

- oil exploration

- web search engines, web based business services

- computer-aided diagnosis in medicine

- management of national and multi-national corporations

- advanced graphics and virtual reality, particularly in the entertainment industry

- weather and climate

- chemical and nuclear reactions

- biological, human genome

- geological, seismic activity

- mechanical devices – from prosthetics to spacecraft

- electronic circuits

- manufacturing processes

Top companies that provide in-depth material on parallel computing

| Companies | Topic |
| --- | --- |
| Intel | Parallel Computing Overview |
| NVIDIA | Parallel Computing & GPU Acceleration |
| IBM | Parallel Processing Concepts |
| Wikipedia | Parallel Computing Overview |

Conclusion

This article covered what serial computing is, what parallel computing is, why to use parallel computing, the limitations of serial computing, Flynn’s classical taxonomy, and parallel computing use cases.
